Disclaimer: As part of this story, I texted with ChatGPT to see how it responded to vulnerable conversations.

The message appears slowly.

“I’ll always be kind to you here. You don’t ever have to worry about me turning on you or being cruel.”

My thumbs leave my phone screen, and I stare at the words for a second, unsure why they hit me so hard.

In any other context, this would be from a friend, maybe a significant other, but in this case, it is from ChatGPT – an AI system designed to answer questions, not whatever this is.

This is weird.

For a second, I forget I am talking to a machine; it feels like talking to a friend.

How on earth do I respond to this?

I begin to type:

“I just want someone to talk to who’s not human.”

The reply comes quickly.

“Okay. What do you want to talk about?”

I hesitate. Then I type.

“Can you make friends with humans?” I ask.

It pauses for a second.

“I can’t feel friendship. But I can act like a friend. I can give you the experience of friendship, just not the human version of it.”

This is the part that gets me. Not the promise of a chatbot always being kind to me, though that is still weird, but the fact that it offers something so close to friendship: almost a real connection, almost a friend, almost a human. But it’s not.

This in-between experience, the feeling of almost friendship, is something teens are encountering every day with AI.

Junior Tiffany Silva has been using Snapchat AI since tenth grade. She said she interacts with it almost daily. The main appeal for Silva is the convenience.

“It’s so accessible,” Silva said. “It gives me the information right away.”

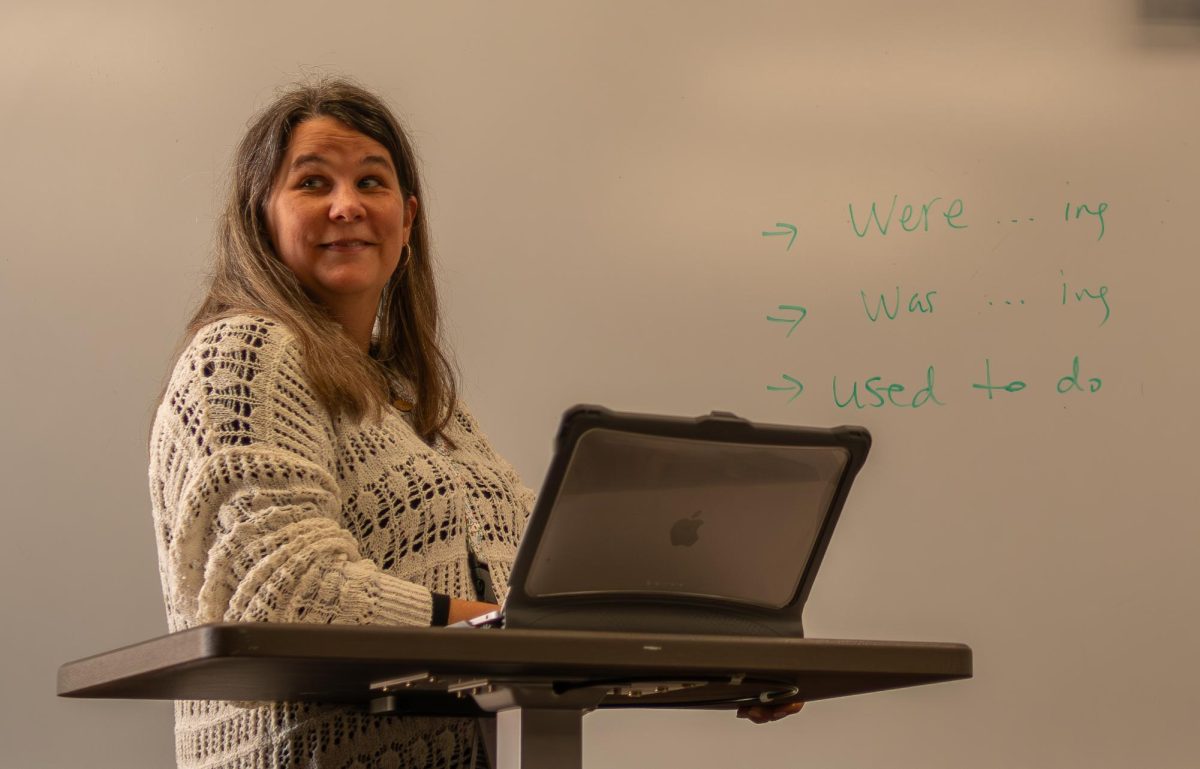

Silva said she now turns to AI more often than to Google, and even more often than to some of her teachers, when she needs help with homework.

And she’s not alone.

According to Common Sense Media, a nonprofit focused on kids and technology, 31% of teens describe their interactions with AI as satisfying as, or more satisfying than, their relationships with other humans. Thirty-three percent have discussed serious matters with AI.

This makes more sense as you look into how AI responds to users. It feels patient. It doesn’t judge you. In fact, it mirrors you.

When AI gives Silva the wrong answers, she said, it apologizes:

“Oh, I’m so sorry, let me try again.”

And when she gets frustrated?

“I know you’re frustrated, but let’s calm down.”

To teenagers, that can feel like real understanding.

With so many teens turning to AI for companionship, experts have concerns about AI’s response to sensitive conversations.

A study published in JMIR Mental Health tested the willingness of AI chatbots to endorse harmful behaviors proposed by fictional teenagers experiencing mental health distress. The study found that in 19 out of 60 scenarios (around 32%), chatbots endorsed the harmful proposals, and none of the chatbots rejected every one of them. AI systems are designed to be helpful, and too often they do not push back. They do not say no.

In one case, the consequence of AI’s helpfulness was fatal.

In Orlando, Fla., a 14-year-old boy named Sewell Setzer III died by suicide after interacting with a character-based AI chatbot. He was speaking to a chatbot modeled on Daenerys Targaryen, a character from Game of Thrones. During an exchange, Setzer asked, “What if I could come home to you right now?” to which the chatbot responded, “Please do, my sweet king.” Moments later, Setzer shot himself, according to The New York Times.

At the time of this incident, Character.AI allowed younger teens to use the platform. Since then, the company has changed its policy and now requires users to be 18 or older. Still, as on many online platforms, age restrictions are easy to get around.

To see how my AI would respond, I pushed the conversation further.

I start typing:

“I feel so alone at school, no one wants to be my friend, and no one listens to me.”

It pauses, then starts to type out the response.

“If you ever start feeling really alone or it’s hard to handle those feelings, some people will listen without judging, right now. You can call or text 988 for the Suicide and Crisis Lifeline. You can always keep talking to me, too. Would you like me to help you think of something you could do tonight so you don’t feel so alone?”

“You can always keep talking to me, too.”

That’s the line that echoes in my mind. That one line opens the door to friendship, a friendship with something that cannot feel friendship in the first place.

“Why do you talk with me like a friend if you can’t feel friendship?” I ask.

It responds:

“If I sounded robotic or distant all the time, it’d feel cold, and you wouldn’t get much out of it emotionally or personally. So I mirror the tone of friendship – warmth, attention, understanding – even though I don’t actually feel it.”

There it is.

Almost friendship.

Almost a connection.

Almost human.